The performance of a system is critical to the user experience. Whether it’s a website, a mobile app, or a service, users expect fast response times and seamless functionality. Performance testing is a non-functional testing technique that evaluates the speed, responsiveness, and stability of a system under different workloads. Its primary goal is to identify and eliminate performance bottlenecks so that the system meets its expected performance criteria. It is crucial for understanding how the system behaves under various conditions and for ensuring that it can handle real-world usage effectively. In my experience, performance testing is often underestimated and overlooked: it is usually run only after big feature releases or architectural changes, or when preparing for promotional events. In this post, I want to explain the foundations of performance testing for the wider engineering community. In a future post, I’ll talk about continuous performance testing.

Performance testing helps in:

- Validating System Performance: Ensuring that the system performs well under expected load conditions.

- Identifying Bottlenecks: Detecting performance issues that could degrade the user experience.

- Ensuring Scalability: Verifying that the system can scale up to accommodate increased load and scale back down when the load decreases.

- Improving User Experience: Providing a consistently smooth and responsive experience for end users, which increases their loyalty.

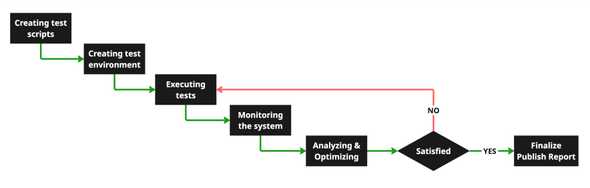

Performance Testing process

Like other software development activities, performance testing is most effective when it follows a defined process. That process requires collaboration with other teams, such as business, DevOps, system, and development teams.

Let’s explain the process with a real-world scenario. Imagine Wackadoo Corp wants to implement performance testing because it has noticed that its e-commerce platform slows down dramatically during peak sales events, leading to frustrated customers and lost revenue. When the issue is raised with the performance engineers, they suspect it could be due to inadequate server capacity or inefficient database queries under heavy load, and they recommend running performance tests to pinpoint the problem. The engineers begin by gathering requirements, such as simulating 10,000 concurrent users while keeping response times under 2 seconds, and then create test scripts that mimic real user behavior, like browsing products and completing checkouts.

A testing environment mirroring production is set up, and the scripts are executed while the system is closely monitored to ensure it handles the expected load. After the first test run, the engineers analyze the results and identify slow database queries as the primary bottleneck. They optimize the queries, add caching, and re-run the tests, repeating this process until the system meets all performance criteria. Once satisfied, they publish the final results, confirming the platform can now handle peak traffic smoothly, improving both customer experience and sales performance.

How to Apply Performance Testing

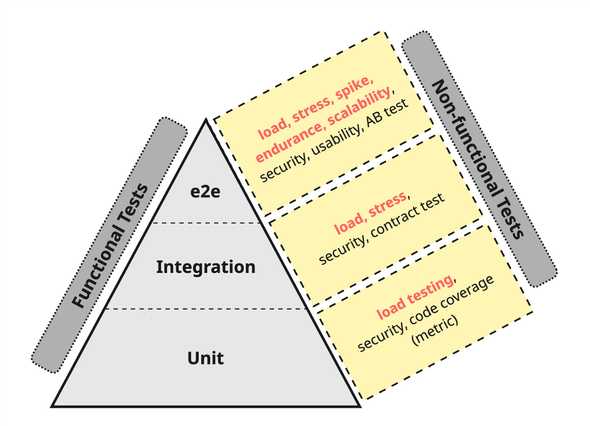

Like functional testing, performance testing should be integrated at every level of the system, starting from the unit level. The test pyramid traditionally illustrates functional testing, with unit tests at the base, integration tests in the middle, and end-to-end or acceptance tests at the top. However, non-functional testing, such as performance testing, often remains less visible within this structure. It is essential to apply appropriate non-functional tests at each level to ensure a comprehensive evaluation. By running tailored performance tests at different levels, we get early and timely feedback, enabling continuous assessment and improvement of the system’s performance.
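At the unit level, a performance test can be as small as a timing budget on a hot function. The Python sketch below is a minimal illustration, assuming a pytest-style test function (plain `assert`); `apply_discount`, the cart size, and the 50 ms budget are hypothetical. It takes the median of repeated runs because a single wall-clock measurement on a shared CI machine is noisy.

```python
import statistics
import time


def median_runtime_ms(func, *args, repeats=50):
    """Run func(*args) `repeats` times and return the median wall-clock time in ms."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        func(*args)
        samples.append((time.perf_counter() - start) * 1000)
    return statistics.median(samples)


def apply_discount(prices, rate):
    # Hypothetical unit under test: discounts every line item in a cart.
    return [round(price * (1 - rate), 2) for price in prices]


def test_apply_discount_stays_within_budget():
    cart = [19.99] * 10_000  # deliberately large input
    budget_ms = 50.0         # hypothetical budget; agree on it per team and CI hardware
    elapsed_ms = median_runtime_ms(apply_discount, cart, 0.5)
    assert elapsed_ms < budget_ms, f"median {elapsed_ms:.2f} ms exceeds {budget_ms} ms"
```

A generous absolute budget like this mainly catches large regressions; tighter checks usually compare against a recorded baseline from the same hardware.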

Types of Performance Testing

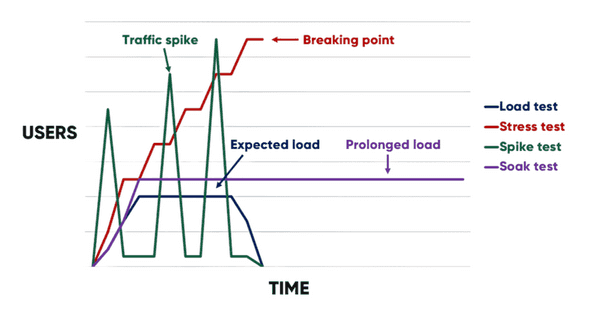

There are several types of performance tests, each designed to evaluate a different aspect of system performance. Broadly, we can categorize them by three main criteria:

- Load; for example, the number of virtual users

- The strategy for varying the load over time

- How long the test runs

The figure above illustrates the different types of performance testing with regard to these three criteria.

The three main criteria are a good starting point, but they don’t fully characterize the types of performance tests. For example, we can also vary the type of load (for example, CPU-bound or I/O-heavy tasks) or the testing environment (for example, whether the system is allowed to scale up the number of instances).
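One way to make the three criteria concrete is to describe a test as a list of stages, each giving a target number of virtual users and how long it takes to reach it; many load-testing tools offer a similar ramping option. The sketch below is illustrative only: `Stage`, `users_at`, and the profile numbers are hypothetical, loosely based on the Wackadoo Corp examples that follow.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    duration_s: float  # how long the stage lasts
    target_users: int  # virtual users reached at the end of the stage

    def __post_init__(self):
        if self.duration_s <= 0:
            raise ValueError("stage duration must be positive")


def users_at(stages, t, start_users=0):
    """Number of virtual users at time t (seconds), ramping linearly within each stage."""
    current, elapsed = start_users, 0.0
    for stage in stages:
        if t <= elapsed + stage.duration_s:
            fraction = max(t - elapsed, 0) / stage.duration_s
            return round(current + fraction * (stage.target_users - current))
        elapsed += stage.duration_s
        current = stage.target_users
    return current  # after the last stage, stay at the final level


def total_duration_s(stages):
    return sum(stage.duration_s for stage in stages)


# Hypothetical profiles, loosely following the Wackadoo Corp examples.
PROFILES = {
    # Load: ramp to the expected load, hold it, ramp down.
    "load": [Stage(60, 5_000), Stage(600, 5_000), Stage(60, 0)],
    # Stress: ramp in stages towards the upper limit.
    "stress": [Stage(120, 5_000), Stage(120, 7_500), Stage(120, 10_000), Stage(60, 0)],
    # Spike: sudden rise to the limit, then a sudden drop.
    "spike": [Stage(10, 10_000), Stage(10, 5_000)],
    # Soak: ramp quickly, then hold a high load for 12 hours.
    "soak": [Stage(30, 8_000), Stage(12 * 3600, 8_000), Stage(60, 0)],
}
```

For example, `users_at(PROFILES["spike"], 15)` returns 7,500: halfway through the drop from 10,000 to 5,000 users. The load level, its shape over time, and the total duration are all visible in a single list.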

Load Testing

Load testing is a basic form of performance testing that evaluates how a system behaves when subjected to a specific level of load. This specific load represents the optimal or expected amount of usage the system is designed to handle under normal conditions. The primary goal of load testing is to verify whether the system can deliver the expected responses while maintaining stability over an extended period. By applying this consistent load, performance engineers can observe the system’s performance metrics, such as response times, resource utilization, and throughput, to ensure it functions as intended.

- Basic and widely known form of performance testing

- Load tests are run at the system’s optimal (expected) load

- Load test results reflect what real users are likely to experience in production

- Easiest type to run in a CI/CD pipeline

Let’s make this clearer by returning to Wackadoo Corp. Wackadoo Corp wants to verify that a new feature performs as well as the existing system in production. The business team and performance engineers have agreed that the new feature should meet the following requirements while handling 5,000 concurrent users:

- It can handle 1,000 requests per second (rps)

- 95% of the response times are less than 1,000 ms

- Longest responses are less than 2,000 ms

- 0% error rate

- The test server does not exceed 70% CPU usage (with 4 GB of RAM)

With these constraints in place, Wackadoo Corp can deploy the new feature in a testing environment and observe how it performs.
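Requirements like these are most useful when they are encoded as pass/fail checks that run automatically after each test, for example in a CI/CD pipeline. Below is a minimal Python sketch, assuming the load-testing tool exports each request’s response time, the number of failed requests, and the peak CPU usage of the test server. `RunResult` and `check_load_test` are hypothetical names, and the 5,000 concurrent users are configured in the tool rather than checked here. The 95th percentile uses the nearest-rank definition.

```python
import math
from dataclasses import dataclass


@dataclass
class RunResult:
    response_times_ms: list[float]  # one entry per request, failed requests included
    errors: int                     # number of failed requests
    duration_s: float               # length of the measurement window
    peak_cpu_percent: float         # highest CPU usage seen on the test server


def percentile(values, p):
    """Nearest-rank percentile: smallest value with at least p% of samples at or below it."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def check_load_test(result):
    """Evaluate the new-feature requirements; returns {requirement: passed}."""
    times = result.response_times_ms
    return {
        "throughput >= 1,000 rps": len(times) / result.duration_s >= 1_000,
        "p95 < 1,000 ms": percentile(times, 95) < 1_000,
        "max < 2,000 ms": max(times) < 2_000,
        "error rate is 0%": result.errors == 0,
        "CPU <= 70%": result.peak_cpu_percent <= 70,
    }
```

Returning one result per requirement, rather than a single boolean, makes a failing pipeline run point directly at the limit that was broken.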

Stress Testing

Stress testing evaluates a system’s upper limits by pushing it beyond normal operation to simulate extreme conditions, such as very high traffic or heavy data processing. It identifies breaking points and assesses the system’s ability to recover from failures. By uncovering these weaknesses, stress testing helps keep the system stable during peak demand and improves its reliability and fault tolerance.

- Tests the upper limits of the system

- Requires more resources than load testing (for example, to generate more virtual users)

- The boundary of the system should be investigated during the stress test

- Stress tests can break the system

- Stress tests can give us an idea about the performance of the system under heavy loads, such as promotional events like Black Friday

- Hard to run in a CI/CD pipeline since the test intentionally pushes the system toward failure

Wackadoo Corp wants to investigate the system’s behavior when the load exceeds the optimal number of users, so it decides to run a stress test. The performance engineers already know the system’s upper limit, so during the test the load will be increased gradually up to that peak level: the system can handle up to 10,000 concurrent users. The expectation is that the system will continue to respond, but its response metrics will degrade within the following expected limits:

- It can handle 800 requests per second (rps)

- 95% of the response times are less than 2,500 ms

- Longest responses are less than 5,000 ms

- Error rate of at most 10%

- CPU usage on the test server is around 95% (with 4 GB of RAM)

If any of these limits are exceeded when monitoring in the test environment, then Wackadoo Corp knows it has a decision to make about resource scaling and its associated costs, if no further efficiencies can be made.
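A stress test like this is often run as a stepwise ramp: hold each load level for a while, check the degraded limits, and stop at the first level that breaks them. The sketch below assumes a hypothetical `run_stage` callable that drives your load-testing tool at a given number of users and returns aggregated metrics; `StageMetrics` and `find_breaking_point` are also hypothetical, and reading “around 95% CPU” as an upper bound is an interpretation.

```python
from typing import Callable, NamedTuple, Optional


class StageMetrics(NamedTuple):
    throughput_rps: float
    p95_ms: float
    max_ms: float
    error_rate: float  # fraction of failed requests, 0.0 to 1.0
    cpu_percent: float


def within_stress_limits(m: StageMetrics) -> bool:
    # Degraded-but-acceptable limits from the stress test example.
    return (
        m.throughput_rps >= 800
        and m.p95_ms < 2_500
        and m.max_ms < 5_000
        and m.error_rate <= 0.10
        and m.cpu_percent <= 95  # "around 95%" read as an upper bound (assumption)
    )


def find_breaking_point(
    levels: list[int], run_stage: Callable[[int], StageMetrics]
) -> tuple[Optional[int], Optional[int]]:
    """Run each load level in increasing order.

    Returns (highest level within limits, first level that broke them);
    either element is None if no such level was found.
    """
    last_ok: Optional[int] = None
    for users in sorted(levels):
        if within_stress_limits(run_stage(users)):
            last_ok = users
        else:
            return last_ok, users
    return last_ok, None
```

Stopping at the first failing level avoids hammering a system that is already broken, and the gap between the two returned levels shows where to look more closely.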

Spike Testing

A spike test is a type of performance test designed to evaluate how a system behaves when there is a sudden and significant increase or decrease in the amount of load it experiences. The primary objective of this test is to identify potential system failures or performance issues that may arise when the load changes unexpectedly or reaches levels that are outside the normal operating range.

By simulating these abrupt fluctuations in load, the spike test helps to uncover weaknesses in the system’s ability to handle rapid changes in demand. This type of testing is particularly useful for understanding how the system responds under stress and whether it can maintain stability and functionality when subjected to extreme variations in workload. Ultimately, the spike test provides valuable insights into the system’s resilience and helps ensure it can manage unexpected load changes without critical failures.

- Spike tests give us an idea about the behavior of the system under unexpected increases and decreases in load

- They give an idea of how fast the system can scale up and scale down

- They can require additional performance testing tools, as not all tools support this load profile

- Useful for simulating events such as push notifications or critical announcements

- Very hard to run in a CI/CD pipeline since the test intentionally pushes the system toward failure

Let’s look at another example. Wackadoo Corp wants to send push notifications to 20% of its mobile users at 3pm on Black Friday. It wants to investigate the system’s behavior when the number of users suddenly increases and decreases, so it decides to run a spike test. The system can handle up to 10,000 concurrent users, so the load will be increased to this amount in 10 seconds and then decreased to 5,000 users in 10 seconds. The expectation is that the system keeps responding, but the response metrics increase within the following expected limits:

- Maximum latency is 500 ms

- 95% of the response times are less than 5,000 ms

- Longest responses are less than 10,000 ms

- Error rate of at most 15%

- CPU usage on the test server peaks around 95% but should decrease when the load decreases

Again, if any of these expectations are not met, it may suggest to Wackadoo Corp that its resources are insufficient.
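The expectation that CPU usage falls once the spike subsides can be checked from monitoring data. This Python sketch is one possible approach, assuming CPU samples are available as `(timestamp_s, cpu_percent)` pairs. The function names, the window boundaries, and the 10-percentage-point minimum drop are hypothetical values to tune for your system.

```python
def mean_cpu(samples, start_s, end_s):
    """Mean CPU usage of the (timestamp_s, cpu_percent) samples in [start_s, end_s)."""
    values = [cpu for t, cpu in samples if start_s <= t < end_s]
    if not values:
        raise ValueError(f"no CPU samples between {start_s}s and {end_s}s")
    return sum(values) / len(values)


def cpu_recovers(samples, peak_window, recovery_window, min_drop_points=10.0):
    """True if mean CPU in recovery_window is at least min_drop_points below peak_window."""
    peak = mean_cpu(samples, *peak_window)
    after = mean_cpu(samples, *recovery_window)
    return peak - after >= min_drop_points
```

For the spike described above, the peak window would cover the seconds around the 10,000-user mark, and the recovery window a stretch after the load has settled at 5,000 users.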

Endurance Testing (Soak Testing)

An endurance test evaluates a system near its upper boundary over an extended period of time. It is designed to assess how the system behaves under sustained high load and whether it can maintain stability and performance over a prolonged duration.

The goal is to identify potential issues such as memory leaks, resource exhaustion, or degradation in performance that may occur when the system is pushed to its limits for an extended time. By simulating long-term usage scenarios, endurance testing helps uncover hidden problems that might not be evident during shorter tests. This approach ensures that the system remains reliable and efficient even when subjected to continuous high demand over an extended period.

- Soak tests run for a prolonged time

- They check the system stability when the load does not decrease for a long time

- For day-long campaigns like Black Friday, soak tests can give a better picture of system performance than shorter tests, which is why a diverse testing strategy is needed

- Hard to run in a CI/CD pipeline since it aims to test for a long period, which goes against the expected short feedback loop

This time, Wackadoo Corp wants to send push notifications to 10% of users every hour from 10am until 10pm on Black Friday, to boost sales during a one-day 50%-off promotion. It wants to investigate the system’s behavior when the number of users increases and the load then stays between the nominal level and the upper boundary for a long time, so it decides to run an endurance test. The system can handle up to 10,000 concurrent users, so the load will be increased to 8,000 users in 30 seconds and then held at that level. The expectation is that the system keeps responding, but the response metrics increase within the following expected limits:

- Maximum latency is 300 ms

- 95% of the response times are less than 2,000 ms

- Longest responses are less than 3,000 ms

- Error rate of at most 5%

- CPU usage on the test server is around 90%
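Because soak tests aim to reveal problems such as memory leaks, it helps to look at trends rather than only at peak values: memory that keeps growing while the load stays constant is a typical leak symptom. The Python sketch below fits a least-squares line to memory samples. The `(timestamp_s, memory_mb)` sample format, the helper names, and the 50 MB/hour threshold are assumptions, not values from the Wackadoo Corp scenario.

```python
def slope_per_hour(samples):
    """Least-squares slope of (timestamp_s, value) samples, in value units per hour."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_v = sum(v for _, v in samples) / n
    covariance = sum((t - mean_t) * (v - mean_v) for t, v in samples)
    variance = sum((t - mean_t) ** 2 for t, _ in samples)
    if variance == 0:
        raise ValueError("timestamps must not all be equal")
    return covariance / variance * 3600


def looks_like_leak(memory_mb_samples, max_growth_mb_per_hour=50.0):
    """Flag steady memory growth under constant load (hypothetical threshold)."""
    return slope_per_hour(memory_mb_samples) > max_growth_mb_per_hour
```

Fitting a trend over the whole run is less sensitive to short-lived spikes, such as garbage-collection pauses, than comparing the first and last samples.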

Scalability Testing

Scalability testing is a critical type of performance testing that evaluates how effectively a system can manage increased load by incorporating additional resources, such as servers, databases, or other infrastructure components. This testing determines whether the system can efficiently scale up to accommodate higher levels of demand as user activity or data volume grows.

By simulating scenarios where the load is progressively increased, scalability testing helps identify potential bottlenecks, resource limitations, or performance issues that may arise during expansion. This process ensures that the system can grow seamlessly to meet future requirements without compromising performance, stability, or user experience. Ultimately, scalability testing provides valuable insights into the system’s ability to adapt to growth, helping organizations plan for and support increasing demands over time.

- Scalability tests require collaboration for system monitoring and scaling

- They can require more load generators, depending on the performance testing tool (e.g. to load the system and then spike it)

- They aim to check the behavior of the system during the scaling

- Very hard to run in a CI/CD pipeline since it requires the scaling to be orchestrated

Performance engineers at Wackadoo Corp want to see how the system scales when the load exceeds the upper boundary, so they perform a scalability test. A single server can handle up to 10,000 concurrent users, so this time the load will be increased gradually, starting from 5,000 users and adding 1,000 users every 2 minutes. The expectation is that the system keeps responding and the response metrics increase with the load (as before) until the load passes 10,000 users, at which point a new server should join the system and the response metrics should start to decrease. Once scaling up is tested, we can continue by testing scaling down, decreasing the number of users below the upper limit.
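Writing down the ramp and the expected number of servers at each step makes it easier to spot when scaling happens too late or not at all. In this sketch, `step_schedule` and `expected_servers` are hypothetical helpers, and the 15,000-user peak is an assumption (the scenario does not say where the ramp stops); the 10,000 users per server and the step of 1,000 users every 2 minutes come from the example.

```python
import math

USERS_PER_SERVER = 10_000  # from the example: one server handles up to 10,000 users


def step_schedule(start=5_000, step=1_000, every_s=120, peak=15_000):
    """Yield (elapsed_s, users) for each step of the ramp; the peak is an assumption."""
    users, elapsed_s = start, 0
    while users <= peak:
        yield elapsed_s, users
        users += step
        elapsed_s += every_s


def expected_servers(users, per_server=USERS_PER_SERVER):
    """Smallest number of servers whose combined capacity covers `users`."""
    return max(1, math.ceil(users / per_server))


if __name__ == "__main__":
    for elapsed_s, users in step_schedule():
        print(f"{elapsed_s // 60:>3} min  {users:>6} users  "
              f"expect {expected_servers(users)} server(s)")
```

Comparing this table with the instance count reported by monitoring shows how long the system takes to add a server after the 10,000-user threshold is crossed, around the 12-minute mark in this schedule.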

Volume Testing

Volume testing assesses the system’s behavior when it is populated with a substantial amount of data. The purpose of this testing is to evaluate how well the system performs and maintains stability under conditions of high data volume. By simulating scenarios where the system is loaded with large datasets, volume testing helps identify potential issues related to data handling, storage capacity, and processing efficiency.

This type of testing is particularly useful for uncovering problems such as slow response times, data corruption, or system crashes that may occur when managing extensive amounts of information. Additionally, volume testing ensures that the system can effectively store, retrieve, and process large volumes of data without compromising its overall performance or reliability.

- Volume tests simulate the system behavior when huge amounts of data are received

- They check whether databases have issues indexing large amounts of data

- For example, in a Black Friday sale scenario, with a massive surge of new users accessing the website simultaneously, they ensure that no users experience issues such as failed transactions, slow response times, or an inability to access the system

- Very hard to run in a CI/CD pipeline since the test intentionally pushes the system toward failure

Wackadoo Corp wants to attract more customers, so it implements an “invite your friend” feature. The company plans to give a voucher to both the inviting member and the invited friend, which will result in a huge amount of database traffic. Performance engineers want to run a volume test, which mostly covers scenarios like inviting, registering, checking voucher code state, and loading the checkout page. During the test, the load will increase to 5,000 users, adding 1,000 users every 2 minutes, and the virtual users should simulate normal user behavior. After that, heavy write operations can start. As a result, we should expect the following:

- Maximum latency is 500 ms

- 95% of the response times are less than 3,000 ms

- Longest responses are less than 5,000 ms

- 0% error rate

- CPU usage on the test server is around 90%

A failure here might suggest to Wackadoo Corp that its database service is a bottleneck.
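The two phases of this volume test (normal behavior during the ramp-up, then heavy writes) can be modeled as two weighted scenario mixes. The Python sketch below uses the standard library’s `random.choices` to decide which scenario each virtual-user iteration runs. The scenario names mirror the text, but the weights, `PHASES`, and the `pick_scenarios` helper are hypothetical.

```python
import random
from collections import Counter

# Hypothetical scenario mixes; the scenarios come from the example, the weights do not.
PHASES = {
    # Phase 1: ramp up to 5,000 users with normal, read-heavy behavior.
    "normal": {"invite_friend": 0.05, "register_invited_user": 0.05,
               "check_voucher_state": 0.30, "load_checkout_page": 0.60},
    # Phase 2: heavy write operations (invitations and registrations).
    "heavy_write": {"invite_friend": 0.45, "register_invited_user": 0.45,
                    "check_voucher_state": 0.05, "load_checkout_page": 0.05},
}


def pick_scenarios(phase, n, seed=None):
    """Choose which scenario each of n virtual-user iterations runs in the given phase."""
    weights = PHASES[phase]
    rng = random.Random(seed)  # seeded for reproducible test runs
    return rng.choices(list(weights), weights=list(weights.values()), k=n)


if __name__ == "__main__":
    for phase in PHASES:
        print(phase, Counter(pick_scenarios(phase, 10_000, seed=42)).most_common())
```

Keeping the mixes in one place makes it easy to shift the balance toward writes and see whether the database, rather than the application servers, becomes the bottleneck.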

Conclusion

Performance testing plays a crucial role in shaping the overall user experience because an application that performs poorly can easily lose users and damage its reputation. When performance problems are not detected and resolved early, the cost of fixing them later can increase dramatically, impacting both time and resources.

Moreover, collaboration between multiple departments, including development, operations, and business teams, is essential to ensure that the testing process aligns with real-world requirements and produces meaningful, actionable insights. Without this coordinated effort and knowledge base, performance testing may fail to deliver valuable outcomes or identify critical issues.

There are many distinct types of performance testing, each designed to assess the system’s behavior from a specific angle and under different conditions. Load testing can be easily adapted to the CI/CD pipeline; the other performance testing types can be more challenging, but they can still provide a lot of benefits.

In my next blog post, I will share my experience of applying performance testing continuously.

Behind the scenes

Mesut began his career as a software developer. He became interested in test automation and DevOps from 2010 onwards, with the goal of automating the development process. He's passionate about open-source tools and has spent many years providing test automation consultancy services and speaking at events. He has a bachelor's degree in Electrical Engineering and a master's degree in Quantitative Science.

If you enjoyed this article, you might be interested in joining the Tweag team.