This is the second in a series of three companion blog posts about dependency graphs. These blog posts explore the key terminology, graph theory concepts, and the challenges of managing large graphs and their underlying complexity.

- Introduction to the dependency graph

- Managing dependency graph in a large codebase

- The anatomy of a dependency graph

In the previous post, we explored the concepts of the dependency graph and got familiar with some of its applications in the context of build systems. We also observed that managing dependencies can be complicated.

In this post, we are going to take a closer look at some of the issues you might need to deal with when working in a large codebase, such as having incomplete build metadata or conflicting requirements between components.

Common issues

Diamond dependency

The diamond dependency problem is common in large projects, and resolving it often requires careful dependency version management or deduplication strategies.

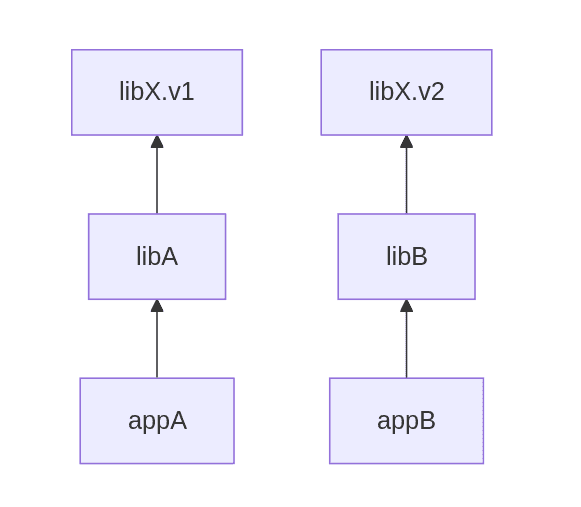

Imagine you have these dependencies in your project:

Packaging appA and appB individually is not a problem, because each of them ends up with a single, specific version of libX.

But what if appA starts using something from libB as well? Now, when building appA, it is unclear which version of libX should be used: v1 or v2. This makes part of the dependency graph look like a diamond, hence the name.

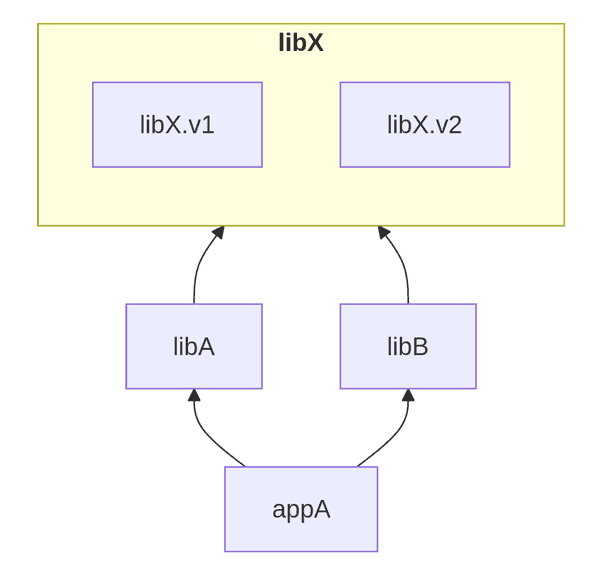
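The conflict itself is easy to detect mechanically: walk everything reachable from an application and look for a library that shows up in more than one version. Here is a minimal Python sketch. It assumes a hypothetical in-memory graph where each node is a (name, version) pair, and where libA and libB pin libX v1 and v2 respectively, which is our reading of the figures:

```python
from collections import defaultdict

# Hypothetical graph: nodes are (name, version) pairs; version is None for first-party code.
GRAPH = {
    ("appA", None): [("libA", None), ("libB", None)],  # appA now also uses libB
    ("appB", None): [("libB", None)],
    ("libA", None): [("libX", "v1")],
    ("libB", None): [("libX", "v2")],
    ("libX", "v1"): [],
    ("libX", "v2"): [],
}


def reachable(graph, root):
    """Return every node reachable from root, including root itself."""
    seen, stack = set(), [root]
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return seen


def version_conflicts(graph, root):
    """Return {library: sorted versions} for libraries reachable in more than one version."""
    versions = defaultdict(set)
    for name, version in reachable(graph, root):
        if version is not None:
            versions[name].add(version)
    return {name: sorted(vs) for name, vs in versions.items() if len(vs) > 1}


print(version_conflicts(GRAPH, ("appA", None)))  # {'libX': ['v1', 'v2']}
print(version_conflicts(GRAPH, ("appB", None)))  # {}
```

Detecting the diamond is the easy part. Deciding which version to keep, or whether both can coexist, is where the complications described next come in.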

Depending on the programming language and the packaging mechanisms, it might be possible to specify that calls made from libA should use libX.v1 and calls made from libB should use libX.v2, but in practice this can get quite complicated.

The worst situation is perhaps when appA appears to be compatible with both v1 and v2, but suffers from intermittent failures under certain conditions, such as high load. You would then be able to build your application, and since it includes a “build compatible” yet different version of the third-party library, you won’t be able to spot the issue straight away.

Some tools, such as the functional package manager Nix, treat packages as immutable values and let you specify exact versions of dependencies for each package, so different versions of the same library can coexist without conflict.

Having a single set of requirements can also be desirable: if all the code uses the same versions of required libraries, you avoid version conflicts entirely, and everyone in the company works with the same dependencies, which reduces “works on my machine”-type issues. In practice, however, this is often unrealistic for large or complex projects, especially monorepos and polyglot codebases. For instance, upgrading a single dependency may require updating many parts of the codebase at once, which can be risky and time-consuming. Likewise, if you want to split your codebase into independently developed modules or services, a single requirements set can become a bottleneck.

Re-exports

Re-exports (when a module imports a member from another module and then exports it again) are possible in some languages, such as Python and JavaScript.

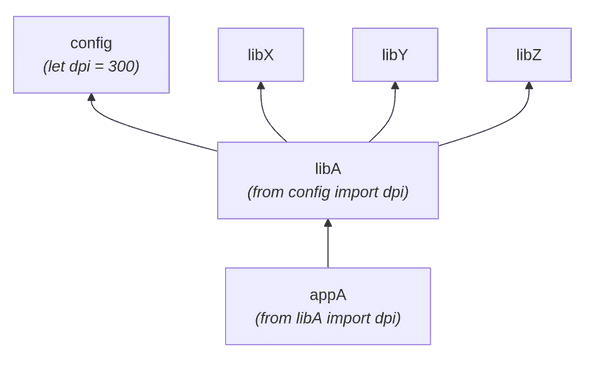

Take a look at this graph

where appA needs value of dpi from the config, but instead of importing from the config,

it imports it from libA.

While re-exports may simplify imports and improve encapsulation, they also introduce implicit dependencies: downstream code like appA becomes coupled not only to libA, but also to the transitive closure of libA. In this graph, this means that changes in any module that libA depends on would require rebuilding appA. This is not truly needed, since apart from dpi in the config, appA doesn’t depend on any code members from that closure.

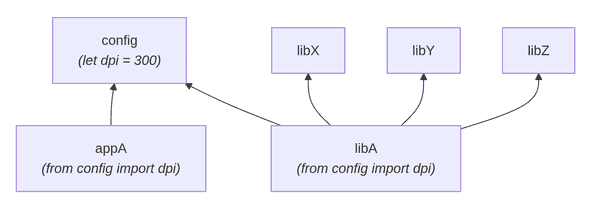

To improve the chain of dependencies, the refactored graph would look like this:

Identifying re-exports can be tricky, particularly in highly dynamic languages such as Python. The available tooling is limited (e.g., see mypy), and custom static analysis programs might need to be written.
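A custom check along those lines can start small. The Python sketch below uses the standard ast module to flag names that one module imports from another module which merely imported them from elsewhere. The module sources are inlined as strings and mirror the figures (config, libA, appA). The check deliberately ignores star imports, `__all__`, relative imports, and dynamic tricks such as importlib, which is exactly where real tooling gets hard.

```python
import ast

# Hypothetical module sources mirroring the figures: libA re-exports dpi from config.
SOURCES = {
    "config": "dpi = 300\n",
    "libA": "from config import dpi\n\ndef render():\n    return dpi * 2\n",
    "appA": "from libA import dpi\n\nprint(dpi)\n",
}


def imported_names(tree):
    """Map each name bound by 'from X import name' to its source module X."""
    names = {}
    for node in ast.walk(tree):
        if isinstance(node, ast.ImportFrom) and node.module:
            for alias in node.names:
                names[alias.asname or alias.name] = node.module
    return names


def find_reexports(sources):
    """Yield (consumer, provider, name, origin) where provider only re-exports name."""
    trees = {mod: ast.parse(src) for mod, src in sources.items()}
    imports = {mod: imported_names(tree) for mod, tree in trees.items()}
    for consumer, tree in trees.items():
        for node in ast.walk(tree):
            if isinstance(node, ast.ImportFrom) and node.module in imports:
                provider = node.module
                for alias in node.names:
                    # The name was itself imported by the provider, not defined there.
                    origin = imports[provider].get(alias.name)
                    if origin is not None:
                        yield consumer, provider, alias.name, origin


for consumer, provider, name, origin in find_reexports(SOURCES):
    print(f"{consumer} imports {name} from {provider}; import it from {origin} instead")
# appA imports dpi from libA; import it from config instead
```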

Stale dependencies

Maintaining up-to-date and correct build metadata is necessary to represent the dependency graph accurately, but issues might appear silently. For example, you might have modules that were once declared to depend on a particular library but no longer use it, even though the metadata in build files still says they do. This causes those modules to be rebuilt unnecessarily every time the library changes.

Some build systems, such as Pants, rely on dependency inference, so users do not have to maintain most of the build metadata in build files by hand. However, any dependencies declared manually (because inference cannot be done programmatically in all situations) still need to be kept up to date and can easily go stale.

There are tools that can help ensure the dependency metadata stays fresh for C++ (1, 2), Python, and JVM codebases, but keeping build metadata up to date is often still a semi-automated process: edge cases and occasional false positives make it unsafe to automate completely.

Incompatible dependencies

It is possible for an application to end up depending on third-party libraries that must not be used together. Such restrictions can be imposed for multiple reasons:

- to ensure the design is sane (e.g., only a single cryptography library may be used by an application)

- to avoid service malfunctions (e.g., two resource-intensive backend services can’t be run concurrently)

- to keep the CI costs under control (e.g., tests may not depend on a live database instance and should always use rich mock objects instead).

Appropriate rules vary between organizations and should be updated continuously as the dependency graph evolves. If you use Starlark for declaring build metadata, take a look at buildozer, which can help query the build files when validating dependencies statically.
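Once the graph is exported from the build system, a rule such as “only a single cryptography library per application” reduces to a set check on each target’s transitive closure. Here is a minimal Python sketch with hypothetical Bazel-style target labels and a single made-up rule:

```python
# Hypothetical dependency graph using Bazel-style labels.
GRAPH = {
    "//app:server": ["//lib:auth", "//lib:storage"],
    "//app:server_test": ["//app:server"],
    "//lib:auth": ["//third_party:cryptolib_a"],
    "//lib:storage": ["//third_party:cryptolib_b"],
    "//third_party:cryptolib_a": [],
    "//third_party:cryptolib_b": [],
}

# Each rule is a set of targets that must never appear together in one transitive closure.
INCOMPATIBLE = [
    frozenset({"//third_party:cryptolib_a", "//third_party:cryptolib_b"}),
]


def closure(graph, root):
    """Return root plus everything it depends on, directly or transitively."""
    seen, stack = set(), [root]
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return seen


def violations(graph, root, rules):
    """Return the rules (as sorted lists) whose members all occur in root's closure."""
    deps = closure(graph, root)
    return [sorted(rule) for rule in rules if rule <= deps]


for target in sorted(GRAPH):
    for rule in violations(GRAPH, target, INCOMPATIBLE):
        print(f"{target}: {rule}")
```

Both //app:server and, transitively, //app:server_test are reported, while //lib:auth on its own is fine. Rules of other shapes (for example “no target whose name ends in _test may reach a live database client”) fit the same closure-based pattern.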

Large transitive closures

If a module depends on a lot of other modules, it is more likely that it will also need to be rebuilt, or even changed, whenever any of those dependencies change. Usually, bigger files (with more lines of code) have more dependencies, but that is not always true. For example, a file full of boilerplate or generated code might be huge, yet barely depend on anything else. Sticking to good design practices, like grouping related code together and making sure classes only do one thing, can help keep your dependencies under control.

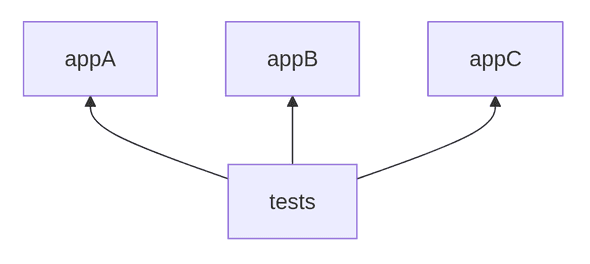

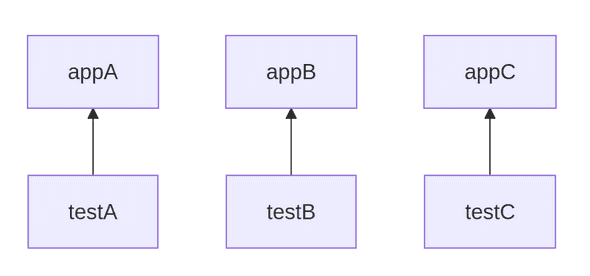

For example, with this graph

a build system is likely to require running all test cases in tests should any of the apps change

which would be wasteful most of the time since most likely you are going to change only one of them at a time.

This could be refactored in having individual test modules targeting every application individually:
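The difference can be quantified by computing the reverse transitive closure of a changed node, that is, everything that depends on it directly or indirectly. Here is a small Python sketch. It assumes three applications for illustration, and the node names for the two graphs are hypothetical:

```python
def reverse_closure(graph, changed):
    """Return every node that depends, directly or transitively, on `changed`."""
    reverse = {}
    for node, deps in graph.items():
        for dep in deps:
            reverse.setdefault(dep, []).append(node)
    affected, stack = set(), [changed]
    while stack:
        for dependent in reverse.get(stack.pop(), []):
            if dependent not in affected:
                affected.add(dependent)
                stack.append(dependent)
    return affected


# Before: a single test module covering every application.
before = {"tests": ["app1", "app2", "app3"], "app1": [], "app2": [], "app3": []}

# After: one test module per application.
after = {
    "test_app1": ["app1"],
    "test_app2": ["app2"],
    "test_app3": ["app3"],
    "app1": [],
    "app2": [],
    "app3": [],
}

print(sorted(reverse_closure(before, "app1")))  # ['tests'], i.e. every test case reruns
print(sorted(reverse_closure(after, "app1")))   # ['test_app1']
```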

Third-party dependencies

It is generally advisable to be cautious about adding any dependency, particularly a third-party one, and its usage should be justified. It may pay off to be reluctant to add external dependencies unless the benefits of bringing them in outweigh the associated cost.

For instance, a team working on a Python command-line application that processes some text data may consider using pandas, because it is a powerful data manipulation tool and twenty lines of code written using built-in modules could be replaced by a one-liner with pandas. But what happens when this application is going to be distributed? The team will have to make sure that pandas (which contains C code that needs to be compiled) can be used on all supported operating systems and CPU architectures while meeting the reliability and performance constraints.

It may sound harsh, but there’s truth to the idea that every dependency eventually becomes a liability. By adding a dependency (either to your dependency graph, if it’s a new one, or to your program), you are committing to stay on top of its security vulnerabilities, compatibility with other dependencies and your build system, and licensing compliance.

Adding a new dependency also means adding a new node or a new edge to the dependency graph. The graph traversal time is negligible, but the time spent rebuilding code at every node is not. The wall-clock build time is less of a problem, since most build systems can parallelize build actions very aggressively, but what about the total compute time? While developer time (mind you, developers still have to wait for the builds to finish!) is far more valuable than machine time, every repeated computation during a build contributes to the total build cost. These operations still consume resources, whether you’re paying a cloud provider or covering the energy and maintenance costs of an on-premises setup.
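The gap between waiting time and compute cost can be made concrete with a toy model. With unlimited parallelism, wall-clock time is bounded below by the longest (critical) path through the graph, while compute cost is the sum of all action durations. The durations and node names below are invented for illustration, and real schedulers are also limited by the number of available workers.

```python
from functools import lru_cache

# Hypothetical action durations (seconds) and dependencies of a tiny build graph.
DURATION = {"app": 5, "libA": 20, "libB": 30, "libC": 25, "codegen": 10}
DEPS = {
    "app": ["libA", "libB", "libC"],
    "libA": ["codegen"],
    "libB": ["codegen"],
    "libC": [],
    "codegen": [],
}


@lru_cache(maxsize=None)
def finish_time(node):
    """Earliest time `node` can finish if every ready action starts immediately."""
    start = max((finish_time(dep) for dep in DEPS[node]), default=0)
    return start + DURATION[node]


wall_clock = finish_time("app")        # length of the critical path codegen -> libB -> app
total_compute = sum(DURATION.values())  # what you pay for, regardless of parallelism
print(wall_clock, total_compute)  # 45 90
```

Adding one more 30-second library to app’s dependencies, with no dependencies of its own, would leave the wall-clock estimate at 45 seconds but raise the compute bill to 120 seconds.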

Cross-component dependencies

It is common for applications to depend on libraries (shared code); however, it is also possible (but less ideal) for an application to use code from another application. If multiple applications need the same code, it is often advisable to extract that code into a shared library so that both applications can depend on it instead.

Modern build systems such as Pants and Bazel have a visibility control mechanism that enforces rules about which components of your codebase may depend on each other. These safeguards exist to prevent developers from accessing and incorporating code from unrelated parts of the codebase. For instance, when building source code for accounting software, the billing component should never depend on the expenses component just because it also needs to support exports to PDF.
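Pants and Bazel declare visibility in their own BUILD files. The Python sketch below only mimics the idea so that the check itself is easy to see. Each hypothetical target lists the package prefixes allowed to depend on it, targets without a declaration are private to their own package (roughly like Bazel’s default), and every edge is checked. The labels follow the accounting example but are invented.

```python
# Hypothetical visibility declarations: package prefixes allowed to depend on each target.
VISIBILITY = {
    "//common/pdf:writer": ["//"],  # shared library, visible everywhere
    "//accounting/expenses:pdf_export": ["//accounting/expenses"],
}

# Dependency edges as (consumer, dependency) pairs.
EDGES = [
    ("//accounting/billing:invoices", "//common/pdf:writer"),
    ("//accounting/billing:invoices", "//accounting/expenses:pdf_export"),
    ("//accounting/expenses:report", "//accounting/expenses:pdf_export"),
]


def package_of(label):
    """Return the package part of a label: '//a/b:c' -> '//a/b'."""
    return label.split(":", 1)[0]


def is_visible(consumer, dependency):
    """Check whether the consumer's package matches one of the dependency's allowed prefixes."""
    # Targets without a declaration are private to their own package.
    allowed = VISIBILITY.get(dependency, [package_of(dependency)])
    pkg = package_of(consumer)
    return any(pkg == prefix or pkg.startswith(prefix.rstrip("/") + "/") for prefix in allowed)


violations = [(c, d) for c, d in EDGES if not is_visible(c, d)]
print(violations)
# [('//accounting/billing:invoices', '//accounting/expenses:pdf_export')]
```

The fix suggested by the error is the same as in the text: move the PDF export code into a shared library such as the hypothetical //common/pdf package.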

However, visibility rules may not be expressive enough to cover certain cases. For instance, if you follow a particular deployment model, you may need to make sure that a specified module never ends up as a transitive dependency of a certain package.

You may also want to enforce that code is justified to exist in a particular package only if it is imported by some other package. For example, to keep the architecture robust, you may want to prevent placing any modules in the src/common-plugins package unless they are imported by modules in the src/plugins package.
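Both kinds of rules can be checked with a few lines of graph code once the dependency graph is exported from the build system. Here is a Python sketch over a hypothetical module-level graph. It reuses the src/common-plugins and src/plugins paths from the text, while the module names inside them and the src/deploy and src/dev packages are invented.

```python
# Hypothetical module-level graph: each module maps to the modules it imports.
GRAPH = {
    "src/plugins/export.py": ["src/common-plugins/formatting.py"],
    "src/plugins/ingest.py": [],
    "src/common-plugins/formatting.py": ["src/dev/debug_tools.py"],
    "src/common-plugins/unused.py": [],
    "src/dev/debug_tools.py": [],
    "src/deploy/handler.py": ["src/plugins/export.py"],
}


def closure(graph, root):
    """Return all modules transitively imported by root."""
    seen, stack = set(), list(graph.get(root, []))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(graph.get(node, []))
    return seen


def reaches_forbidden(graph, package, module):
    """Rule 1: modules in `package` whose transitive closure contains `module`."""
    return sorted(n for n in graph if n.startswith(package + "/") and module in closure(graph, n))


def unjustified(graph, shared_package, consumer_package):
    """Rule 2: modules in `shared_package` not imported directly by any module in `consumer_package`."""
    used = {dep for node, deps in graph.items() if node.startswith(consumer_package + "/") for dep in deps}
    return sorted(n for n in graph if n.startswith(shared_package + "/") and n not in used)


print(reaches_forbidden(GRAPH, "src/deploy", "src/dev/debug_tools.py"))
# ['src/deploy/handler.py']
print(unjustified(GRAPH, "src/common-plugins", "src/plugins"))
# ['src/common-plugins/unused.py']
```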

Keep in mind that when introducing a modern build system to a large legacy codebase that has evolved without attention to the shape of its dependency graph, builds may be slow not because code compilation or tests take long, but because any change in the source code requires rebuilding most or all nodes of the dependency graph. That is, if all nodes of the graph transitively depend on a node with many widely used code members that are modified often, there will be lots of rebuild actions unless this module is split into multiple modules, each containing only closely related code.

Direct change propagation

When source code in a module is changed, downstream nodes (reverse dependencies of this module) often get rebuilt even if the specific changes don’t truly require it. In large codebases, this causes unnecessary rebuilds, longer feedback cycles, and higher CI costs.

In most build systems (including Bazel and GNU Make), individual actions or targets are invalidated if their inputs change. In GNU Make, this means the mtime of the declared input files; in Bazel, the digests of the inputs and the action key. Most build systems can also perform an “early cutoff”: if the output of an action doesn’t change, the actions depending on it are not rerun. Granted, with GNU Make, the mtime could be updated even if the output was already correct from a previous build (which forces unnecessary rebuilds), but that’s a very nuanced point.
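Here is a toy model of digest-based invalidation with early cutoff, written in Python. Each action is rerun only when the digest of its input differs from the previous build. If a rerun produces byte-identical output, the next action’s input digest is unchanged and that action is skipped. The compile and link steps are stand-ins invented for illustration. This is not how Bazel or GNU Make are implemented internally.

```python
import hashlib


def digest(data):
    """Content digest of an action's input or output."""
    return hashlib.sha256(data.encode()).hexdigest()


def compile_step(source):
    """Toy compiler: drops comment lines, so comment-only edits produce identical output."""
    return "\n".join(line for line in source.splitlines() if not line.lstrip().startswith("#"))


def link_step(obj):
    """Toy linker: wraps the object code."""
    return f"binary({obj})"


class Builder:
    def __init__(self):
        self.cache = {}     # action name -> (input digest, output)
        self.executed = []  # actions actually run during the latest build

    def run(self, name, func, data):
        key = digest(data)
        cached = self.cache.get(name)
        if cached is not None and cached[0] == key:
            return cached[1]  # input unchanged: reuse the previous output
        self.executed.append(name)
        output = func(data)
        self.cache[name] = (key, output)
        return output

    def build(self, source):
        self.executed = []
        obj = self.run("compile", compile_step, source)
        # Early cutoff: if obj is byte-identical to last time, link is skipped.
        return self.run("link", link_step, obj)


builder = Builder()
builder.build("x = 1\n")
print(builder.executed)  # ['compile', 'link']
builder.build("# explain x\nx = 1\n")
print(builder.executed)  # ['compile'] (output unchanged, link cut off)
builder.build("x = 2\n")
print(builder.executed)  # ['compile', 'link']
```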

However, with Application Binary Interface (ABI) awareness, downstream nodes would only need to be rebuilt if the interface they rely on has actually changed.

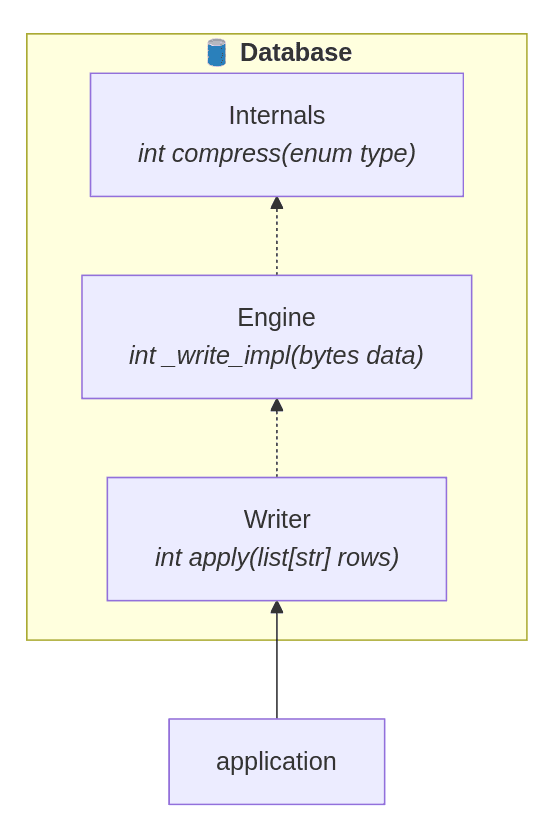

A related idea is having a stable API, which can help figure out which nodes in the graph actually changed. Picture a setup like this — an application depends on the database writer module which in turn depends on the database engine:

This application calls the apply function from the database writer module to insert some rows, and the writer then uses the database engine to handle the actual disk writing. If anything in the internals changes (e.g., how the data is compressed before writing to disk), the client won’t notice as long as the writer’s interface stays the same. That interface acts as a “stable layer” between the parts. In the build context, running the application’s tests should not be necessary when only the database component changes.

In practice, reordering methods in a Java class, adding a docstring to a Python function, or even making minor changes in the implementation (such as return a + b instead of return b + a) would still mark that node in the graph as “changed”, particularly if you rely on tooling that queries modified files in the version control repository without taking into account the semantics of the change.

Therefore, relying on the checksum of a source file or of all files in a package (depending on what a node in your dependency graph represents), just like relying on the checksum of compiled objects (be it machine code or bytecode), may prove insufficient for determining which changes deserve to be propagated further along the dependency chain. Take a look at Recompilation avoidance in rules_haskell to learn more about checksum-based recompilation avoidance in Haskell.
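One way to make change detection more semantic is sketched here for Python only. Instead of hashing a module’s raw bytes, hash a normalized view of its public interface: the names and positional parameter names of public top-level functions, sorted so that reordering them does not matter. What counts as the “interface” here is our illustrative assumption. The sketch ignores classes, constants, defaults, keyword-only parameters, annotations, and runtime behavior, so it is not a safe substitute for real ABI tracking.

```python
import ast
import hashlib


def file_digest(source):
    """Digest of the raw source, as naive change detection would compute it."""
    return hashlib.sha256(source.encode()).hexdigest()


def interface_digest(source):
    """Digest of public top-level function signatures, ignoring bodies and docstrings."""
    signatures = []
    for node in ast.parse(source).body:
        if isinstance(node, ast.FunctionDef) and not node.name.startswith("_"):
            params = [arg.arg for arg in node.args.args]
            signatures.append(f"{node.name}({', '.join(params)})")
    return hashlib.sha256("\n".join(sorted(signatures)).encode()).hexdigest()


v1 = "def add(a, b):\n    return a + b\n"
v2 = 'def add(a, b):\n    """Add two numbers."""\n    return b + a\n'
v3 = "def add(a, b, c=0):\n    return a + b + c\n"

print(file_digest(v1) == file_digest(v2))            # False: the bytes changed
print(interface_digest(v1) == interface_digest(v2))  # True: same public interface
print(interface_digest(v1) == interface_digest(v3))  # False: the signature changed
```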

Many programming languages have language constructs, such as interfaces in Go, that can avoid this problem by replacing a dependency on some concrete implementation with a dependency on a shared public interface. The application from the example above could depend on a database interface (or abstract base class) instead of the actual implementation. This is another kind of “ABI” system that avoids unnecessary rebuilds and helps to decouple components.
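In Python, a comparable effect can be approximated with typing.Protocol (or an abstract base class). The application is written against a small interface, and the concrete writer is passed in. The application module then only needs an edge to the interface module, while the implementation can be wired in elsewhere. The names DatabaseWriter, import_orders, and InMemoryWriter are hypothetical and only loosely follow the example above.

```python
from typing import Protocol


# Interface module: the only thing the application needs to depend on.
class DatabaseWriter(Protocol):
    def apply(self, rows: list[dict]) -> int:
        """Persist rows and return how many were written."""
        ...


# Application module: written against the interface only.
def import_orders(writer: DatabaseWriter, orders: list[dict]) -> int:
    return writer.apply(orders)


# Implementation module: its internals (e.g., compression) can change
# without touching the application module.
class InMemoryWriter:
    def __init__(self) -> None:
        self.rows: list[dict] = []

    def apply(self, rows: list[dict]) -> int:
        self.rows.extend(rows)
        return len(rows)


writer = InMemoryWriter()
print(import_orders(writer, [{"id": 1}, {"id": 2}]))  # 2
```

Python has no compilation step to skip, so the benefit shows up in how the build graph’s edges are declared. Tests of the application can target the interface, and changes to InMemoryWriter need not invalidate them.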

How ABI compatibility is handled depends on the build system used. Buck has a concept of Java ABI that is used to figure out which nodes actually need rebuilding during an incremental build. For example, a Java library doesn’t need to be rebuilt just because one of its dependencies changed, unless the public interface of that dependency changed too. Knowing this helps skip unnecessary rebuilds when the output would be the same anyway.

The most recent versions of Bazel have experimental support for dormant dependencies, which represent not an actual dependency but the possibility of one. The idea is that an edge between nodes can be marked as dormant, passed up the dependency graph, and then turned into an actual dependency (“materialized”) in the reverse transitive closure. Take a look at the design document to learn more about the rationale.

We hope it is now clear how notoriously complex managing a large dependency graph in a monorepo can be. Changes in one package can ripple across dozens or even hundreds of interconnected modules. Developers must carefully coordinate versioning, detect and prevent circular dependencies, and ensure that builds remain deterministic, particularly in industries with stricter reproducibility constraints, such as automotive or biotech.

Failing to keep the dependency graph sane often leads to brittle CI pipelines and long development feedback loops, which impede innovation and worsen developer experience. In the future, we can expect more intelligent tools to emerge, such as machine-learning-based dependency impact analyzers that predict the downstream effects of code changes, and self-healing CI pipelines that automatically adjust scope and change propagation. Additionally, semantic-aware refactoring tools and “intent-based” build systems could automate much of the manual effort currently required to manage interdependencies at scale.

In the next post, we’ll talk about scalability problems and limitations of the dependency graph scope that is exposed by build systems.

Behind the scenes

Alexey is a build systems software engineer who cares about code quality, engineering productivity, and developer experience.

If you enjoyed this article, you might be interested in joining the Tweag team.