The performance of a system is critical to the user experience. Whether it’s a website, mobile app, or service, users demand fast responses and seamless functionality. Every change to a system brings the risk of performance degradation, so you should check every commit during development to ensure that loyal users do not face any performance issues.

From my experience, one of the most effective methods to achieve this is Continuous Performance Testing (CPT). In this post, I want to explain how CPT helps catch performance-related issues during development. Since CPT is a performance testing strategy, a basic understanding of performance testing will help, so a look at my previous blog post will be useful!

What is Continuous Performance Testing?

Continuous Performance Testing (CPT) is an automated and systematic approach to performance testing that uses various tools to run tests automatically throughout the development lifecycle. Its primary goal is to gather insightful data, providing real-time feedback on how code changes impact system performance and ensuring the system performs adequately before proceeding further.

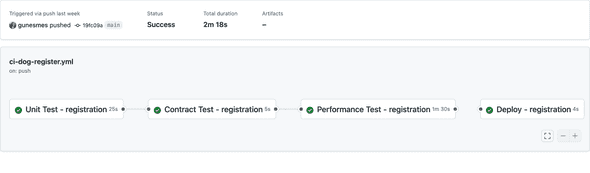

As shown in the figure above, CPT is integrated directly into the Continuous Integration and Continuous Deployment (CI/CD) pipeline. This integration allows performance testing to act as a gatekeeper, enabling quick and accurate assessments to ensure that software meets the required performance benchmarks before moving to subsequent stages.

A key benefit of this approach is its alignment with shift-left testing, which emphasizes bringing performance testing earlier into the development lifecycle. By identifying and addressing performance issues much sooner, teams can avoid costly late-stage fixes, improve software quality, and accelerate the overall development process, ultimately ensuring that performance standards and Service Level Agreements (SLAs) are consistently met.

To which types of performance testing can CPT be applied?

Continuous performance testing can be applied to all types of performance testing. However, each type has different challenges.

Automated performance testing is:

- easily applied to load tests,
- hard to apply to stress and spike tests, but still beneficial,
- very hard to apply to soak (endurance) tests.

For more details about why stress, spike, and soak tests are difficult to implement in CI/CD, see the previous blog post.

Why prefer automated load testing?

The load test is designed with the primary objective of assessing how well the system performs under a specific and defined load. This type of testing is crucial for evaluating the system’s behavior and ensuring it can handle expected levels of user activity or data processing. The success of a load test is determined by its adherence to predefined metrics, which serve as benchmarks against which the system’s performance is measured. These metrics might include response times, throughput, and resource utilization. Given this focus on quantifiable outcomes, load testing is the easiest and best-suited type of performance testing for Continuous Performance Testing (CPT).
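To make these metrics concrete, here is a minimal Python sketch, assuming you have the raw per-request latencies (in ms), the number of failed requests, and the test duration. The function name `summarize` and its inputs are hypothetical. Resource utilization is left out because it usually comes from infrastructure monitoring rather than from the load generator, and percentiles use NumPy's default linear interpolation, which may differ slightly from what a given load testing tool reports.

```python
import numpy as np


def summarize(latencies_ms, failed, duration_s):
    """Summarize one load-test run into typical pass/fail metrics (hypothetical helper)."""
    lat = np.asarray(latencies_ms, dtype=float)
    return {
        "avg": float(lat.mean()),                   # mean response time (ms)
        "p(90)": float(np.percentile(lat, 90)),     # 90th percentile (ms)
        "p(95)": float(np.percentile(lat, 95)),     # 95th percentile (ms)
        "max": float(lat.max()),                    # slowest request (ms)
        "throughput_rps": len(lat) / duration_s,    # requests per second
        "error_rate": failed / len(lat),            # share of failed requests
    }


# Usage with made-up numbers: four requests over two seconds, one of them failed
run = summarize([10, 20, 30, 40], failed=1, duration_s=2.0)
print(run)
```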

How to apply continuous load testing

Strategy

Performance testing can be conducted at every level, starting with unit testing. It should be tailored to the specific performance requirements of each development stage, ensuring that the system meets its requirements and user expectations.
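At the unit level, a performance check can be as simple as timing a function against a budget. The sketch below is one possible way to do this in Python; the helper `assert_within_budget` and the budget value are hypothetical choices, not tied to any particular framework.

```python
import statistics
import time


def assert_within_budget(func, budget_s, repeats=5):
    """Run func `repeats` times and fail if the median duration exceeds budget_s."""
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        func()
        timings.append(time.perf_counter() - start)
    # The median is less sensitive to one-off hiccups (GC, scheduling) than the mean
    median = statistics.median(timings)
    if median > budget_s:
        raise AssertionError(
            f"median {median * 1000:.3f} ms exceeds budget {budget_s * 1000:.3f} ms"
        )
    return median


# Usage: a hypothetical unit-level check that sorting 100,000 integers stays under 0.5 s
assert_within_budget(lambda: sorted(range(100_000, 0, -1)), budget_s=0.5)
```

Taking the median of several repetitions is a deliberate choice: single timings on shared CI runners are noisy, and a budget that is too tight will produce flaky failures.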

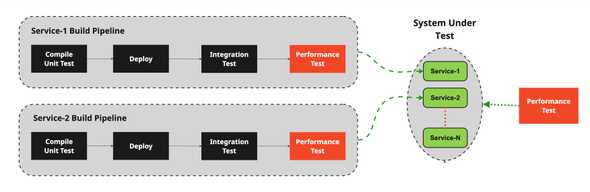

Load testing can be performed at any level: unit, integration, system, or acceptance. In Continuous Performance Testing (CPT), performance testing should start as early as possible in the development process to provide timely feedback, especially at the integration level. Early testing helps identify bottlenecks and optimize the application before it moves to later stages. When CPT is applied at the system level, it offers insights into the overall performance of the entire system and how its components interact, helping ensure the system meets its performance goals.

In my opinion, to maximize CPT benefits, it’s best to apply automated load testing at both the integration and system levels. This ensures realistic load conditions, highlights performance issues early, and helps optimize performance throughout development for a robust, efficient application.

Evaluation with static thresholds

Continuous Performance Testing (CPT) is built around fully automated testing, so the results of performance tests must also be evaluated automatically to ensure efficiency and accuracy. This can be done in different ways. One of them is to establish static thresholds that serve as benchmarks for the current results: by comparing against these predefined values, we can assess whether the application meets the required performance standards.

The code snippet below shows how we can set threshold values for various metrics with K6. K6 is an open-source performance testing tool written in Go. It lets us write performance test scripts in JavaScript and has a built-in thresholds feature that we can use to evaluate the results. Duration thresholds such as those on http_req_duration are expressed in milliseconds. For more information about setting thresholds, please see the K6 thresholds documentation.

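```javascript
import { check, sleep } from "k6"
import http from "k6/http"

export const options = {
  vus: 250, // number of virtual users
  duration: "30s", // duration of the test
  thresholds: {
    http_req_duration: [
      "avg<2", // average response time must be below 2ms
      "p(90)<3", // 90% of requests must complete below 3ms
      "p(95)<4", // 95% of requests must complete below 4ms
      "max<5", // max response time must be below 5ms
    ],
    http_req_failed: [
      "rate<0.01", // http request failures should be less than 1%
    ],
    checks: [
      "rate>0.99", // 99% of checks should pass
    ],
  },
}

// Hypothetical test body: the original script body is not shown. The endpoint
// and payload are placeholders; the check names match the output below.
export default function () {
  const res = http.post(
    "http://localhost:8080/register", // hypothetical endpoint
    JSON.stringify({ username: `user-${__VU}-${__ITER}` }),
    { headers: { "Content-Type": "application/json" } },
  )
  check(res, {
    "is status 201": (r) => r.status === 201,
    // hypothetical: assumes the response JSON contains an "id" field
    "is registered": (r) => r.json("id") !== undefined,
  })
  sleep(1) // one iteration per second per virtual user
}
```

With the example above, K6 tests the service for 30 seconds with 250 virtual users and compares the results to the metrics defined in the threshold section. Let’s look at the results of this test: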

```text
running (0m30.0s), 250/250 VUs, 7250 complete and 0 interrupted iterations
default [ 100% ] 250 VUs 30.0s/30s

✓ is status 201
✓ is registered

✓ checks.........................: 100.00% 15000 out of 15000
✗ http_req_duration..............: avg=2.45ms min=166.47µs med=1.04ms max=44.52ms p(90)=3.68ms p(95)=7.71ms
  { expected_response:true }...: avg=2.45ms min=166.47µs med=1.04ms max=44.52ms p(90)=3.68ms p(95)=7.71ms
✓ http_req_failed................: 0.00% 0 out of 7500
  iterations.....................: 7500 248.679794/s
  vus_max........................: 250 min=250 max=250

running (0m30.2s), 000/250 VUs, 7500 complete and 0 interrupted iterations
default ✓ [ 100% ] 250 VUs 30s
time="2025-03-12T12:09:54Z" level=error msg="thresholds on metrics 'http_req_duration' have been crossed"
Error: Process completed with exit code 99.
```

Although the checks and the http_req_failed rate thresholds are satisfied, this test failed because all the calculated http_req_duration metrics are greater than the thresholds defined above.
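The pass/fail logic behind these thresholds is simple enough to reproduce. Below is a simplified Python sketch, not K6's own evaluator, that parses expressions of the form `p(90)<3` and checks them against the http_req_duration statistics reported above, assuming the intended limits of 2, 3, 4 and 5 ms stated in the script's comments. The names `evaluate` and `reported` are illustrative.

```python
import operator
import re

# Simplified parser for threshold expressions in the style of "p(90)<3".
# Only a few statistics and comparison operators are supported here.
_EXPR = re.compile(
    r"^\s*(avg|min|med|max|p\(\d+(?:\.\d+)?\))\s*(<=|>=|<|>)\s*(\d+(?:\.\d+)?)\s*$"
)
_OPS = {"<": operator.lt, "<=": operator.le, ">": operator.gt, ">=": operator.ge}


def evaluate(stats, expressions):
    """Return {expression: passed} for each threshold expression."""
    results = {}
    for expr in expressions:
        match = _EXPR.match(expr)
        if match is None:
            raise ValueError(f"unsupported threshold expression: {expr!r}")
        stat, op, limit = match.groups()
        results[expr] = _OPS[op](stats[stat], float(limit))
    return results


# http_req_duration statistics from the run above, in ms
reported = {"avg": 2.45, "min": 0.16647, "med": 1.04, "max": 44.52,
            "p(90)": 3.68, "p(95)": 7.71}
outcome = evaluate(reported, ["avg<2", "p(90)<3", "p(95)<4", "max<5"])
for expr, passed in outcome.items():
    print(f"{'PASS' if passed else 'FAIL'} {expr}")
```

All four expressions fail, which matches K6 reporting the http_req_duration thresholds as crossed.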

Evaluation by comparing to historical data

Another method of evaluation involves comparing the current results with historical data within a defined confidence level. This statistical approach allows us to understand trends over time and determine if the application’s performance is improving, declining, or remaining stable.

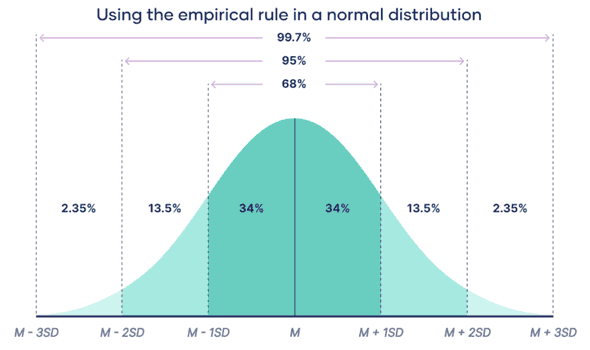

In many cases, aggregated performance metrics, such as the mean response time or throughput of a test run, can be assumed to follow a normal distribution, especially when you have a large enough sample size. Individual response times are usually right-skewed, so the assumption applies to per-run aggregates rather than to single requests. The normal distribution, often referred to as the bell curve, is a probability distribution that is symmetric about the mean. You can read more about it on Wikipedia.
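A quick simulation illustrates when the normality assumption is reasonable. This sketch assumes hypothetical lognormal response times (a common right-skewed shape) and compares the skewness of individual latencies with that of per-run means; a skewness near 0 indicates a roughly symmetric, bell-shaped distribution. The parameters (500 runs of 1,000 requests, sigma 0.8) are arbitrary.

```python
import numpy as np


def skewness(values):
    """Sample skewness: about 0 for a symmetric (e.g. normal) distribution."""
    x = np.asarray(values, dtype=float)
    d = x - x.mean()
    return float(np.mean(d**3) / np.mean(d**2) ** 1.5)


rng = np.random.default_rng(seed=42)
# Hypothetical latencies in ms: 500 runs x 1,000 requests, lognormal (right-skewed)
latencies = rng.lognormal(mean=0.0, sigma=0.8, size=(500, 1000))

raw_skew = skewness(latencies.ravel())          # individual requests
means_skew = skewness(latencies.mean(axis=1))   # one mean per run

print(f"skewness of individual latencies: {raw_skew:.2f}")
print(f"skewness of per-run means:        {means_skew:.2f}")
```

Individual latencies stay strongly skewed, while the per-run means are close to symmetric, so run-level aggregates are a safer input to the Z-test than raw request timings.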

Here’s how the statistical analysis works: from your historical data, calculate the mean (or average, μ) and standard deviation (SD, σ) of the performance metrics. These values will serve as the basis for hypothesis testing. Then, determine the performance metric from the current test run that you want to compare against the historical data. This could be the mean response time, p(90), error rate, etc.

Define test hypotheses

Concretely, let’s first formulate a hypothesis to test the current result against the historical data.

- Null Hypothesis (H0): The current performance metric is equal to the historical mean (no significant difference).

  $$H_0: \mu_{\text{current}} = \mu_{\text{historical}}$$

- Alternative Hypothesis (H1): The current performance metric is not equal to the historical mean (there is a significant difference).

  $$H_1: \mu_{\text{current}} \neq \mu_{\text{historical}}$$

Define a comparison metric and acceptance criterion

To compare the current result to the historical mean, we calculate the Z-score, which tells you how many standard deviations the current mean is from the historical mean. The formula for the Z-score is:

$$Z = \frac{\mu_{\text{current}} - \mu_{\text{historical}}}{\sigma_{\text{historical}}}$$

Where:

- $\mu_{\text{current}}$ is the current mean.
- $\mu_{\text{historical}}$ is the historical mean.
- $\sigma_{\text{historical}}$ is the standard deviation of the historical data.
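As a worked example, suppose the p(90) response times of the last five runs were 3.1, 3.4, 3.2, 3.5 and 3.3 ms and the current run measured 3.9 ms. These numbers are hypothetical. Plugging them into the formula:

```python
import numpy as np

# Hypothetical history: p(90) response time (ms) of the last five runs
historical = np.array([3.1, 3.4, 3.2, 3.5, 3.3])
current = 3.9  # hypothetical p(90) of the current run (ms)

mu_hist = historical.mean()           # historical mean, 3.3
sigma_hist = historical.std(ddof=1)   # sample standard deviation, about 0.158
z = (current - mu_hist) / sigma_hist  # Z-score, about 3.79

print(f"Z = {z:.2f}")  # Z = 3.79
```

A Z-score of about 3.79 lies far outside the ±1.96 range used for a 95% confidence level, so this run would be flagged.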

Finally, we need to determine the critical value of the Z-score: for a 95% confidence level, you can extract it from the standard normal distribution table. For a two-tailed test, the critical values are approximately ±1.96. For the full standard normal distribution table, see, for example, this website.
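Instead of a lookup table, the critical value can be computed from the inverse CDF of the standard normal distribution. This sketch uses Python's standard-library `statistics.NormalDist`; the script later in this post uses `scipy.stats.norm.ppf`, which gives the same values. The helper name `critical_value` is chosen here for illustration.

```python
from statistics import NormalDist


def critical_value(confidence_level: float) -> float:
    """Two-tailed critical Z-value for the given confidence level."""
    # Put (1 - confidence_level) / 2 of the probability mass in each tail
    return NormalDist().inv_cdf((1 + confidence_level) / 2)


for level in (0.90, 0.95, 0.99):
    print(f"{level:.0%}: ±{critical_value(level):.2f}")
# 90%: ±1.64
# 95%: ±1.96
# 99%: ±2.58
```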

The confidence level means that, if the current run performs the same as the historical runs (H0 is true), its Z-score falls within ±1.96 in 95% of cases; the remaining 5% are false alarms. A higher confidence level produces fewer false alarms but makes smaller regressions harder to detect. I believe the 95% confidence level provides good enough coverage for most purposes, but depending on the criticality of the product or service, you can increase or decrease it.
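The meaning of the 95% level can be checked with a simulation. Assuming, hypothetically, that a metric's per-run values are normally distributed with a mean of 100 ms and an SD of 5 ms, and that nothing in the system has changed, about 5% of runs still land outside ±1.96 and would be flagged:

```python
import numpy as np

rng = np.random.default_rng(seed=7)

# Assumption: with no real change, each run's metric (e.g. mean latency in ms)
# is drawn from the same normal distribution as the history.
hist_mean, hist_sd = 100.0, 5.0
runs = rng.normal(hist_mean, hist_sd, size=100_000)

z_scores = (runs - hist_mean) / hist_sd
false_alarm_rate = float(np.mean(np.abs(z_scores) > 1.96))
print(f"false alarm rate: {false_alarm_rate:.3f}")  # expected to be close to 0.05
```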

Make a decision

If the calculated Z-score falls outside the range of -1.96 to +1.96, you reject the null hypothesis (H0) and conclude that there is a statistically significant difference between the current performance metric and the historical mean. If the Z-score falls within this range, you fail to reject the null hypothesis, indicating no significant difference.

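Based on these findings, you can interpret whether the application’s performance has improved, declined, or remained stable compared to historical data: the sign of the Z-score gives the direction of the change. For a response time metric, a positive Z-score means the current run is slower than the historical mean; for throughput, a positive Z-score means an improvement. This statistical analysis provides a robust framework for understanding performance trends over time and making data-driven decisions for further optimizations.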
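The decision and its interpretation can be folded into one small function. This is a sketch assuming you know whether lower or higher values are better for the metric (lower for response times, higher for throughput); the name `interpret` and its labels are illustrative.

```python
def interpret(z_score: float, critical_value: float = 1.96,
              lower_is_better: bool = True) -> str:
    """Classify a run as 'stable', 'improved' or 'degraded' from its Z-score."""
    if abs(z_score) <= critical_value:
        return "stable"  # fail to reject H0: no significant difference
    # H0 rejected: the sign of Z gives the direction of the change
    went_up = z_score > 0
    return "degraded" if went_up == lower_is_better else "improved"


print(interpret(3.79))                        # degraded
print(interpret(-2.5))                        # improved
print(interpret(3.0, lower_is_better=False))  # improved (e.g. throughput)
print(interpret(0.8))                         # stable
```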

Implementation

In the above section, I explained how we can evaluate the results of performance testing using historical data. We do not need to perform complex statistical analyses manually to check these results. Instead, we can script the process that tests the hypothesis for the Z-score at the 95% confidence level, which gives us a straightforward way to assess performance outcomes in the CI/CD pipeline.

```python
import json

import numpy as np
from scipy import stats


def hypothesis_test(historical_data, current_data, confidence_level=0.95):
    # historical_data: one value of the metric per past run (e.g. each run's p(90))
    # current_data: the metric value(s) measured in the current run

    # Calculate historical mean and sample standard deviation
    historical_mean = np.mean(historical_data)
    historical_std = np.std(historical_data, ddof=1)

    # Calculate the current mean
    current_mean = np.mean(current_data)

    # Z-score: distance of the current mean from the historical mean, in SDs
    z_score = (current_mean - historical_mean) / historical_std

    # Critical Z-value for the two-tailed test (about 1.96 for 95%)
    critical_value = stats.norm.ppf((1 + confidence_level) / 2)

    # Print results
    print(f"Historical Mean: {historical_mean:.2f}")
    print(f"Current Mean: {current_mean:.2f}")
    print(f"Z-Score: {z_score:.2f}")
    print(f"Critical Value for {confidence_level:.0%} confidence: ±{critical_value:.2f}")

    # Hypothesis testing decision: an AssertionError (non-zero exit code)
    # fails the CI job when H0 is rejected
    assert abs(z_score) <= critical_value, (
        f"z_score {z_score:.2f} exceeds the critical value ±{critical_value:.2f}"
    )


def get_historical_data():
    # Hypothetical helper: load one metric value per past run from a JSON list
    with open("historical_results.json") as f:
        return json.load(f)


def get_current_result():
    # Hypothetical helper: load the current run's metric value(s) from a JSON list
    with open("current_result.json") as f:
        return json.load(f)


if __name__ == "__main__":
    # Read the historical data (performance metrics)
    historical_data = get_historical_data()
    # Current data to compare
    current_data = get_current_result()
    hypothesis_test(historical_data, current_data, confidence_level=0.95)
```

The challenges with CPT

CPT adds cost to your project. It is an extra step in the CI pipeline and requires performance engineering expertise that organizations might need to hire for. Furthermore, a dedicated test environment is needed to run the performance tests.

Maintenance can also be challenging. Test data generation is critical to the success of performance testing: it requires obtaining data, masking sensitive information, and deleting it securely. CPT also requires adding tests for new services, updating tests to reflect changes in existing services, and removing tests for unused services. Following up on detected issues and keeping up with new features of performance testing tools are also necessary. All of this must be done regularly to keep CPT running, adding to existing maintenance efforts.

The benefits of CPT

Continuous Performance Testing offers significant benefits by enabling automatic early detection of performance issues within the development process. This proactive approach allows teams to identify and address bottlenecks before they reach production, reducing both costs and efforts associated with fixing problems later. By continuously monitoring and optimizing application performance, CPT helps ensure a fast, responsive user experience and minimizes the risk of outages or slowdowns that could disrupt users and business operations.

In addition to early detection, CPT enhances resource utilization by pinpointing inefficient code and infrastructure setups, ultimately reducing overall costs despite initial investments. It also fosters better collaboration among development, testing, and operations teams by providing a shared understanding of performance metrics: each test generates valuable data that supports advanced analysis and better decision-making regarding code improvements, infrastructure upgrades, and capacity planning. Finally, CPT offers the convenience of on-demand testing with just one click, providing an easy-to-use baseline for more rigorous performance evaluations when needed.

Conclusion

Continuous Performance Testing (CPT) transforms traditional performance testing by integrating it directly into the CI/CD pipeline. CPT can, in principle, be applied to every type of performance testing, but load testing is the most advantageous, with the lowest cost and the highest benefit.

The core idea is to automate and conduct performance tests continuously and earlier in the development cycle, aligning with the “shift-left” philosophy. This approach provides real-time feedback on performance impacts, helps identify and resolve issues sooner, and ultimately leads to improved software quality, faster development, and consistent adherence to performance standards and SLAs.

Behind the scenes

Mesut began his career as a software developer. He became interested in test automation and DevOps from 2010 onwards, with the goal of automating the development process. He's passionate about open-source tools and has spent many years providing test automation consultancy services and speaking at events. He has a bachelor's degree in Electrical Engineering and a master's degree in Quantitative Science.

If you enjoyed this article, you might be interested in joining the Tweag team.